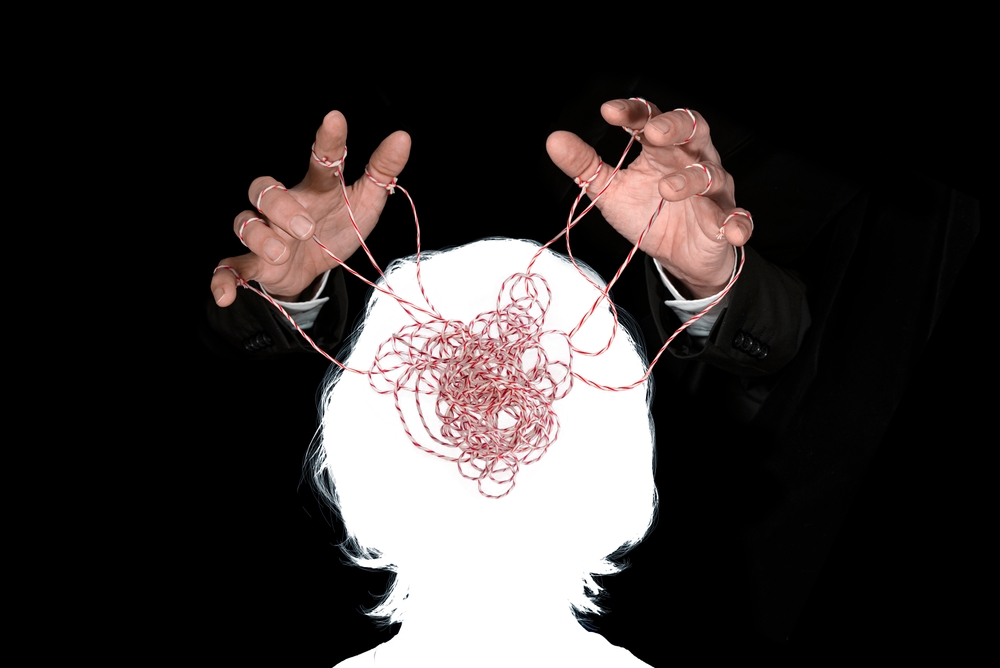

How Russia and China manipulate us on social media. RSU analyses how not to be taken in

Writer: Kristofers Krūmiņš, Project Manager, RSU China Study Centre

In recent years, the social sciences have responded to the growing threat in the information space, including on social media, yet many questions remain about how exactly to counter information operations conducted by other countries.

More detailed answers to some of these questions can be found on the website of the Horizon Europe research project DE-CONSPIRATOR, where Dr. Una Aleksandra Bērziņa-Čerenkova, a researcher at Rīga Stradiņš University (RSU) and a member of the research team, describes the specific features of Chinese and Russian influence operations.

Although NATO has not yet singled out cognitive warfare as a separate battlefield in its guidelines, it nonetheless exists and is particularly significant in the case of Latvia. Too often, researchers and politicians settle for repeating that better education and critical thinking are the main line of defence. These are certainly important factors that reduce the impact of influence operations, but it is also necessary to discuss other defensive solutions that have not yet gained wider public recognition. In this article, we introduce the main defensive tools we identified in the course of the study. Some of them can be used by any social media user, while others apply at the state level.

On a global scale, China and Russia stand out as the most active perpetrators of disinformation. The two countries often use very similar methods on social media to mislead or sow confusion in the countries they target, yet they have very different capabilities and ambitions when it comes to influencing society in Latvia or in Europe as a whole. This creates something of a paradox: although Russia has, in a sense, become dependent on China because of the war in Ukraine, in cognitive warfare it is China that is still learning from Russia's techniques.

To counter this hostile duo in the information space, it is essential to understand the features their disinformation has in common.

Vaccination as a metaphor

When thinking about disinformation, we often feel the urge to blame those who try to influence society with lies and falsehoods. However, disinformation is only as effective as our own weaknesses allow it to be. In their approach to cognitive warfare, both Russia and China exploit their opponents' weaknesses by dividing society into predetermined social groups and targeting each of them.

Russian and Chinese disinformation actors most often amplify existing news stories created by local influencers or politicians who dislike the "established order." These stories usually spread quickly and are influential because they always contain a grain of truth. One should therefore avoid the lazy claim that pro-Kremlin sentiment arises only because "people are being manipulated." Often, we ourselves give our adversaries ideas about how to break down society's defences against external enemies.

The most effective response to attempts to destabilise our society is to diagnose our own weaknesses and not to be afraid to speak about them publicly.

This may seem counterintuitive, but protection against disinformation often works much like vaccination. Each dose of a vaccine exposes the body to a weakened or harmless form of the pathogen, against which it develops antibodies. Likewise, public communication should place greater emphasis on identifying the weaknesses that our adversary has not yet exploited but which could lead to a pandemic-scale disinformation campaign in the future.

Social science experiments support the vaccination metaphor: after looking at examples of disinformation, people are much better able to recognise situations in which someone is trying to manipulate them. (1) At the individual level, each of us can study striking examples of disinformation and later recognise something similar "in real life." Various game-based platforms have been developed for this purpose, and they would be worth using more often in public information campaigns. (2)

Read the full version of the article in the RSU Zinātnes vēstnesis section of the LSM portal:

- Russia and China are becoming the main actors in the global disinformation space.

- The power of disinformation is based not on lies, but on the weaknesses of our own society.

- China and Russia most often amplify existing local content rather than inventing it from scratch.

- The “vaccination effect”: by seeing examples of disinformation, people become more resistant to manipulation.

- Ukraine's experience prior to 2022 shows that exposing disinformation pre-emptively works.

- AI makes disinformation campaigns faster, cheaper, and harder to detect.

- Social media users tend to share content not because it is true, but because it is “interesting.”

- To protect themselves, all users need to be able to recognise the basic techniques of bots, trolls, and manipulation.

- Defence alone is no longer enough: there are growing calls to consider "counterattacks" as well.